Gender bias in data and technology

Anthony Giddens Professor of Sociology

- In the era of early computers, just after World War Two, lots of programmers were women, but they were hidden from history.

- The data that is going into a lot of the latest technologies is embedded with all kinds of bias – such as the algorithms used in recruitment.

- Facial recognition technologies identify white male faces much more easily than dark-skinned female faces, because they’re trained on white faces. If the training data is biased, then the outcomes will be biased.

- The notion that all social problems have a technological solution is the central idea of the Silicon Valley companies. It’s very important that we resist that kind of technological determinism.

Programmed to think that tech is a male preserve

I’ve been working on gender and technology for many, many decades. I was originally interested in why the workforce is very gendered, why some kinds of work are seen as appropriate for women and some kinds of work are seen as appropriate for men. When I started doing this work, it was very clear that women were trained much more to be nurses and teachers, and men were trained to do technical work, engineering work, industrial work. This notion of masculinity being associated with scientific, technical, industrial work had a long history. This was transposed in the computer era so that computer programming, quite early on, became a male preserve.

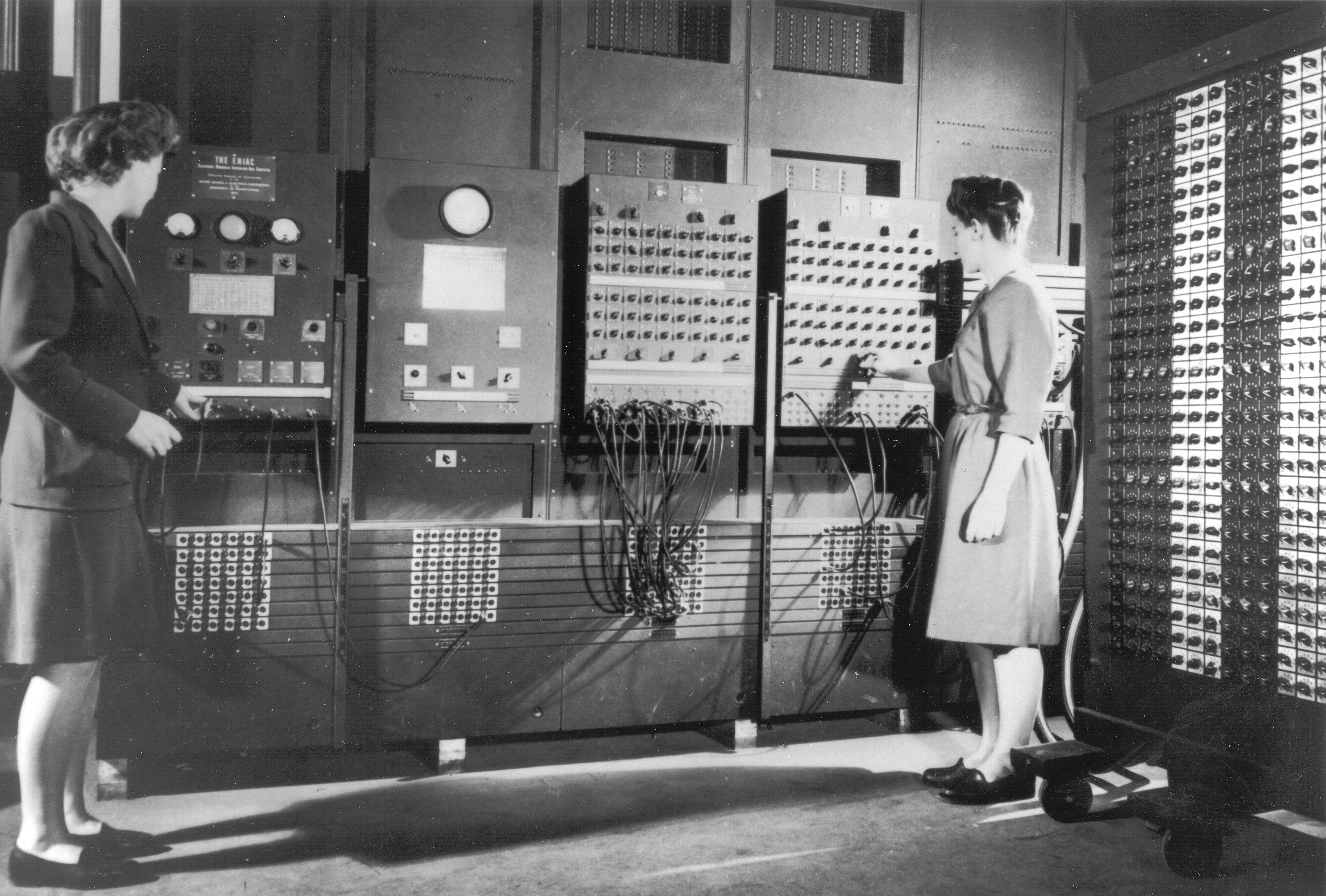

By doing some historical work, I realised that this hadn’t always been the case for the early computers – those huge computers that take up a whole room that you see just after the Second World War. There were lots of women programmers in there, but they had somehow been hidden from history. Somehow, the contemporary notion was that physics, maths and computing were very much a male preserve.

I remember reading Sherry Turkle’s early work on MIT hackers, as she called them – a culture of engineers who were guys who worked all night and ordered pizza at 3 am and who were very hooked on their machines. In more recent years, I’ve been interested in how it is that if you look at conferences of roboticists, people who work in artificial intelligence, and now machine learning, we find that these areas are very male-dominated.

Two women operating the ENIAC’s main control panel while the machine was still located at the Moore School. Wikimedia Commons. Public Domain.

How gender relations shape technology

One of the main things I’ve been concerned with during my career has been the extent to which these inequalities and differences in where women and men work actually affect and shape the kinds of technology we get. And if there’s anything I’ve tried to argue, it is about how gender relations actually shape technology. An example I often use with my students is looking at the history of contraceptive technologies and why they were designed for use by women rather than men, why we still don’t have a male pill, and why we have decades of women taking the pill. I deconstruct for them that this isn’t actually to do with biology. It was to do with particular choices being made about who the suitable subjects for contraceptive technologies were.

Think about search engines and Wikipedia, which I actually did some work on. Billions of people use Wikipedia as if it were an encyclopaedia of objective scientific knowledge. Yet, if you look up “physicist”, you find a lot of male physicists on there, but very few female physicists. There was this classic case about 10 years ago when people would type in “Did she invent something?” and the auto-correct would assume they meant “Did he invent something?” Now, there are quite a lot of hackathons and projects working to correct this.

Once a year, on Ada Lovelace Day, here in Britain, there’s a project to increase the number of entries of women scientists, women physicists. It’s been the same in terms of whiteness and race. There are all kinds of ways in which the knowledge that we get in search engines reflects who is doing most of the inputting, who’s doing most of the editing of those things. That has been a very important project.

Portrait of Ada Lovelace. Wikimedia Commons. Public Domain.

Bias in algorithms

I’m currently very interested in artificial intelligence and automated decision-making: the way in which the data that is going into a lot of the latest technologies is embedded with societal, gender, racial and cultural biases. Let me give you one example, which is the increasing use of algorithms in hiring and recruiting. There have been some very good studies recently showing that these tools somehow assume that the best qualified worker for many kinds of occupations is a young white male who hasn’t had any breaks in his career. This assumption gets reproduced in these technologies, so that when an automated recruitment tool is going through CVs and identifies any gaps in education or in career, those people are thrown out. You can see the ways in which this will impact anyone who’s had a slightly divergent career, particularly, for example, mothers who’ve taken time out of their careers.
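To make the screening behaviour described above concrete, here is a minimal sketch in Python. It is not taken from any real recruitment product: the `Candidate` fields, the `screen` function and the six-month threshold are all hypothetical, and the applicants are invented. It only illustrates how a rule that looks neutral can discard a strong candidate because of a career break.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    years_experience: int
    career_gap_months: int  # longest break in employment (hypothetical field)


def screen(candidates, max_gap_months=6):
    """Hypothetical rule: keep only candidates whose longest gap is within the limit."""
    return [c for c in candidates if c.career_gap_months <= max_gap_months]


# Invented applicants, for illustration only.
applicants = [
    Candidate("A", years_experience=10, career_gap_months=0),
    Candidate("B", years_experience=12, career_gap_months=14),  # e.g. time out for caring
    Candidate("C", years_experience=4, career_gap_months=2),
]

shortlisted = screen(applicants)
shortlisted_names = [c.name for c in shortlisted]
print(shortlisted_names)
```

In this invented example, candidate B has the most experience but never reaches a human reviewer, because the rule treats any long gap as disqualifying regardless of the reason for it.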

Machine learning systems and inequality

There’s a lot of work now on facial recognition technologies and the way in which these technologies identify white male faces much more easily than dark-skinned female faces, because they’re trained on white faces. If the training data is biased, then the outcomes will be biased. This is the case with the discussion about using automated decision-making for policing environments, for example in criminal justice.

These automated technologies are presented to us as if they are more objective than human beings, who, like judges, are prejudiced. The claim is that if we can somehow automate these decisions, we’ll have fairer, more objective decisions. I think it’s very important to critique this idea. Like previous technologies, these technologies arise in particular social contexts. They’re fed data that reproduces the kind of inequalities that exist in society, and those inequalities then get reproduced and sometimes even amplified in these machine learning systems.
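The point about amplification can be illustrated with a deliberately simple sketch. Everything here is an assumption made for illustration: the two groups, the historical hiring figures and the "majority rule" model are invented, and real machine learning systems are far more complex. The sketch only shows how a system that learns the most common past outcome for each group can turn a historical gap into an absolute one.

```python
from collections import Counter, defaultdict

# Invented historical decisions as (group, hired) pairs:
# group X was hired 7 times out of 10, group Y 4 times out of 10.
history = (
    [("X", True)] * 7 + [("X", False)] * 3
    + [("Y", True)] * 4 + [("Y", False)] * 6
)


def fit_majority_rule(records):
    """Toy 'model': for each group, predict whichever outcome was most common."""
    counts = defaultdict(Counter)
    for group, hired in records:
        counts[group][hired] += 1
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}


def hire_rate(records, group):
    """Share of past candidates in the group who were hired."""
    outcomes = [hired for g, hired in records if g == group]
    return sum(outcomes) / len(outcomes)


model = fit_majority_rule(history)
historical_rates = {g: hire_rate(history, g) for g in ("X", "Y")}
predicted_rates = {g: 1.0 if model[g] else 0.0 for g in ("X", "Y")}

print("historical:", historical_rates)
print("predicted: ", predicted_rates)
```

In the invented history the two groups are 30 percentage points apart. The toy model, by always choosing the most likely past outcome, would hire everyone from group X and no one from group Y, so the inequality in the data is not just reproduced but widened.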

Sleep is for other people

One thing I want to strongly stress, from my year at Stanford, is how much these big tech companies insist on a 24/7 model of work. It makes it very hard for anybody except young people who don’t have any caring responsibilities, or who don’t want to live a normal life, to survive in these companies, which look like campuses and have gyms and lots of food in them. Everything is done to encourage people to spend their lives within those four walls. It’s a model of work, an intensity of work, that has this ideology behind it: that unless you work day and night, 24/7, you’re not going to make the genius breakthroughs. Somehow, being completely manic and work-obsessive is the only way to be the genius who makes the breakthrough. It’s a particular kind of model of work that can only be adopted by particular kinds of young men who are willing to adhere to that lifestyle.

How Silicon Valley shapes our view of the future

What’s really important is the way in which Silicon Valley has captured our imaginations in terms of what technology is and what it can be. We talk about this in terms of the “socio-technical imaginary” of the future. How do we imagine what the world’s going to be like? How do we think about our utopias now? How much do we think about futures in terms of technology, and how much do these companies take up that space? This is being driven by commercial concerns, rather than by slowing down and thinking about the social problems and to what extent technology is appropriate for solving them.

Aerial view of Apple campus building in Silicon Valley. By Uladzik Kryhin.

Technology can’t fix everything

One of the things I’ve been interested in over the past few years is the discussion about having robots for care. What aspects of care can they cover in nursing homes for the elderly? What aspects do we want automated? What aspects do we want robots to look after, and what are the aspects that we don’t think technology is appropriately applied to? Evgeny Morozov and lots of others talk about this as technological solutionism: the notion that all social problems have a technological solution, which is the central idea of the Silicon Valley companies. I think it’s very important that we should resist that kind of technological determinism, that technological solutionism.

A more imaginative future for gender and technology

I’ve been arguing for a long time that there is a direct link between the fact that technologies are designed by a very small segment of the population and the kinds of technologies we get, and that if we really want to think through different kinds of technologies for different needs and to be more imaginative, we need to have a much more diverse workforce. It seems that if we had a workforce designing technology that reflected society more broadly, then we’d have a lot more ideas and experience to draw on.

We might think of much more effective technologies for lots of different problems. One of my old slogans used to be that we can’t leave engineering to engineers. This notion that engineering is a simple technical tool, and this old division between the humanities and social sciences and the so-called “hard sciences”, have really broken down. There’s much more recognition that technologists need social scientists to work with them in order to produce technologies for the good of us all.

Discover more about the past, present and future of inequality in technology

Wajcman, J. (2004). TechnoFeminism. Polity.

Ford, H., & Wajcman, J. (2017). ‘Anyone can edit’, not everyone does: Wikipedia’s infrastructure and the gender gap. Social Studies of Science, 47(4), 511–527.

Crawford, K. (2021). Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. Yale University Press.