Lovely experiments have been done in babies as young as six months old. When the babies move their eyes to look for a target on a screen and the target does not appear because they have looked in the wrong direction, their brains produce a signature that is associated with making errors in adults. We think this shows the first signs of intact circuits for self-monitoring, quite early in life. There is, however, a disconnect between the emergence of those initial signs of self-monitoring and the point at which young children gain the capacity to explicitly self-reflect, which comes later, around the age of four or five.

Metacognition beyond humans

Professor of Cognitive Neuroscience

- Infants show early brain signals of self-monitoring, but explicit self-reflection emerges only around ages four to five and is shaped by social and cultural learning.

- Many nonhuman animals, from dolphins to primates, exhibit metacognitive behavior, though debates remain about how to interpret these tests.

- Large language models already track their own confidence for simple tasks, yet whether they build a richer third-person self-model comparable to humans is still unresolved.

- Because AI systems rarely expose their internal confidence, people tend to over-trust them, highlighting the need to make uncertainty transparent for safer, more calibrated human-AI collaboration.

Modeling the self

The core of metacognition is having some kind of self-model, a model of the world that includes ourselves in it — our capacities, our skills, the actions that we are taking. The building blocks of that self-model can actually be quite simple. We think an important component of that is the ability to track uncertainty about different sources of information that are driving our decisions. We know that many other animal species can form a sense of confidence in their actions; they can monitor when they make errors on simple tasks, so we think that is a very widespread capacity. That is something that also seems straightforward for artificial systems to emulate. What is perhaps more distinct about human metacognition is that it also involves a third-person perspective on ourselves. We can know things about ourselves in the same way that we know things about other people. We can represent ourselves as having a certain set of traits or characteristics, and we think that that is unlikely to be shared by other animal species — perhaps our closest primate relatives, but not much further than that. Whether that ability for a third-person perspective on ourselves as agents in the world is also something that artificial systems can emulate is an open question. I think there is evidence that artificial systems, such as large language models, can form sophisticated representations of other minds; they exhibit remarkably sophisticated theory of mind. Whether they are also then doing that to themselves and to their own processes is still very much an open question.

Self-monitoring in infants

© babiesandlanguage.com

Pre-reflective childhood phase

It is quite striking. You can have a child who is two or three years old, who is verbal, walking and talking and doing lots of interesting things, and yet they may not yet have the capacity to introspect and reflect on what they know and what they do not know.

© Pexels

We think this is because those early neural signatures of self-monitoring provide only the initial building blocks of a much richer self-model, which is then shaped over many years and may even involve an aspect of cultural learning. We may actually need to be taught how to become self-aware by our parents, our teachers, our family members. This is something that parents will know well. When a child is in a particular emotional state, for example when they are crying, the parent might come in and provide the language that the child needs to understand why they are feeling the way they are feeling. They might say "you are hungry" or "you are tired", and this can provide the teaching signals that the child needs to start interpreting their own mental states in a way that eventually gives them fully fledged metacognition.

Studying cultural influences

It is very hard to gain experimental evidence for cultural acquisition of metacognition, partly because it is rooted in naturalistic interactions in real-world settings. To gain that evidence, we would need studies that follow child development longitudinally and ask whether exposure to certain caregiver interactions earlier in life predicts the emergence of metacognition later in life. Some of those studies are being done, but the challenge lies in measuring metacognition, which is itself complex, especially in young children. One real challenge is that you can often see spurious differences in metacognition that are a consequence of the child having better memory or better problem-solving ability, and that have nothing to do with their capacity for metacognition. Whenever we measure metacognition in the lab, we need to control for confounds introduced by variation in other aspects of cognitive performance.

Metacognition in animals

There have been ingenious tests developed to track metacognition in animals, and these involve developing nonverbal analogs of human metacognition tests. In a human metacognition test, we might ask someone to rate how confident they feel in making a particular judgment, or to recognize when they have made an error on a particular task. We can create analogs of these metacognitive judgments for nonverbal animals by training the animal to bet on whether its own performance is successful or not. This is known as post-decision wagering.

© Pexels

It involves the animal completing a particular task, such as remembering some information. After they have memorized that information, they are asked to bet on whether they are likely to answer correctly the question that is about to be posed to them. What is remarkable is that many of the species tested with this method show rich signs of being able to monitor whether they are right or wrong on a particular task, forming a sense of confidence about whether they have performed well or badly without being told.
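To make the logic of post-decision wagering concrete, here is a minimal Python sketch. The trial records, the function name `accuracy_by_wager`, and the two-level high/low bet are illustrative assumptions, not data or code from any published experiment. The idea is simply that, if bets track performance, accuracy should be higher on trials where the animal bet high.

```python
from typing import Dict, List, Tuple


def accuracy_by_wager(trials: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the proportion of correct responses for each wager level.

    Each trial is a (wager, correct) pair, where wager is a label such as
    "high" or "low" and correct says whether the response was right.
    """
    totals: Dict[str, int] = {}
    hits: Dict[str, int] = {}
    for wager, correct in trials:
        totals[wager] = totals.get(wager, 0) + 1
        hits[wager] = hits.get(wager, 0) + (1 if correct else 0)
    return {wager: hits[wager] / totals[wager] for wager in totals}


# Invented example data: 4 high-bet trials (3 correct), 4 low-bet trials (1 correct).
trials = [
    ("high", True), ("high", True), ("high", True), ("high", False),
    ("low", True), ("low", False), ("low", False), ("low", False),
]
rates = accuracy_by_wager(trials)
print(rates)
```

A gap between the two accuracy rates is only suggestive on its own; differences in first-order task performance would still need to be controlled before reading it as evidence of metacognition.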

Dolphin uncertainty recognition

One of the early experiments in the animal metacognition literature was carried out by John David Smith with a dolphin named Natua, and the experiment was set up so that the dolphin was trained to distinguish between two different tones, two different sounds of different pitch.

© Pexels

Once the dolphin was trained on being able to distinguish these two different sounds, the experimenters gave Natua a third button to press when he was unsure about what the answer was. This was known as the opt-out response. He could opt out of making any decision at all when he was not sure what the answer was. When they then introduced an intermediate sound, one that was halfway between the other two, Natua started pressing this third button as if he were not sure how to categorize that sound. When humans are given that same task, that is exactly the kind of behavior they exhibit, too. One inference, then, is that the reason Natua is using that third button is that he is recognizing a lack of knowledge. He is recognizing that he does not know the answer, and he is acting on this feeling of uncertainty or low confidence in his understanding of the task to opt out of the decision. That is one behavioral measure we can use to start getting evidence about metacognition in other animals.

Other animal competence

Nonhuman primates, such as macaque monkeys and chimpanzees, show similar signs of metacognitive competence. In fact, passing these post-decision wagering tests is a stricter test of metacognition than the opt-out tasks used originally in the experiments with Natua. Opt-out tasks have been controversial because it is ambiguous whether they actually involve metacognition or can be solved using first-order representations of the world. The idea here is that the animal might press that third button not because it lacks confidence in its response, but because it has learnt that pressing that third button is the appropriate thing to do when presented with a stimulus that is halfway between the other two. More recent tasks developed using post-decision wagering are more secure in isolating metacognitive processes.
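The first-order objection can be illustrated with a small simulation. In this sketch the pitch scale (0 to 1), the noise level, the boundaries 0.4 and 0.6, and all function names are assumptions chosen for illustration. The simulated agent has no confidence variable at all; it simply maps a noisy percept onto one of three responses, yet it still opts out most often for the intermediate tone.

```python
import random


def first_order_policy(percept: float,
                       low_bound: float = 0.4,
                       high_bound: float = 0.6) -> str:
    """Three-way stimulus-response mapping with no confidence estimate."""
    if percept < low_bound:
        return "low"
    if percept > high_bound:
        return "high"
    return "opt_out"


def opt_out_rate(stimulus: float,
                 n_trials: int = 2000,
                 noise_sd: float = 0.1,
                 seed: int = 0) -> float:
    """Fraction of simulated trials on which the agent presses the third button."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    opt_outs = 0
    for _ in range(n_trials):
        percept = stimulus + rng.gauss(0.0, noise_sd)  # noisy pitch percept
        if first_order_policy(percept) == "opt_out":
            opt_outs += 1
    return opt_outs / n_trials


# Low tone, intermediate tone, high tone (hypothetical pitch values).
for stimulus in (0.0, 0.5, 1.0):
    print(stimulus, opt_out_rate(stimulus))
```

Because this purely first-order rule reproduces the opt-out pattern, the pattern by itself cannot distinguish a learnt "middle category" from a genuine feeling of uncertainty.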

AI self-awareness progress

Some of the building blocks of metacognition, such as the capacity to track confidence in a variety of representations about the world, seem relatively straightforward to implement in artificial systems. Large language models like ChatGPT predict the next word in a sequence, and along with that prediction comes a range of probabilities about which word will be most appropriate. In one sense, baked into the architecture of these models is a sense of confidence in whether their answer is correct or not. A variety of researchers have probed whether those confidence estimates that are internal to the system indeed reflect something about the probability of that answer being correct, and, at least for simple questions, the newer versions of ChatGPT and related systems are well calibrated; they have good metacognition about whether they know or do not know the answer.
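As a rough illustration of what "well calibrated" means here, the following Python sketch computes a simple binned calibration error: the average gap between stated confidence and observed accuracy. The function name, the choice of five bins, and the example numbers are assumptions for illustration; the confidences stand in for whatever probability a model assigns to its own answer, and none of the values are measurements from a real system.

```python
from typing import List, Sequence, Tuple


def expected_calibration_error(confidences: Sequence[float],
                               correct: Sequence[bool],
                               n_bins: int = 5) -> float:
    """Weighted mean of |mean confidence - accuracy| across confidence bins."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if not confidences:
        raise ValueError("at least one answer is required")
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        if not 0.0 <= conf <= 1.0:
            raise ValueError("confidence must lie in [0, 1]")
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the top bin
        bins[index].append((conf, ok))
    total = len(confidences)
    error = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        error += (len(members) / total) * abs(mean_conf - accuracy)
    return error


# Hypothetical answers, all given with 90% confidence.
calibrated = expected_calibration_error([0.9] * 10, [True] * 9 + [False])
overconfident = expected_calibration_error([0.9] * 10, [True] * 5 + [False] * 5)
print(calibrated, overconfident)
```

A value near zero means confidence matches accuracy; the second example, where 90% confidence goes with 50% accuracy, gives a gap of about 0.4.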

© Pexels

On one level, the building blocks of metacognition that we see are widespread in the animal kingdom and emerge early in life in infants, and they are likely already instantiated in artificial systems. What is less clear is the status of the broader capacity that develops later in children. It comes online around the age of four or five, continues to develop and be shaped by our cultural environment right into adolescence, and enables us to take a more third-person perspective on ourselves, representing our skills and capacities in the same way that we might represent other people. Whether this late-developing aspect of human metacognition is being captured by current AI systems is still very much an open question.

Metacognition for AI social interaction

AI systems are already participating in meaningful social interactions. People are using them for therapeutic purposes, for friendship, for advice, and I think this is only going to continue to grow. One thing we know about the benefits of metacognition for productive social interaction in humans is that sharing fine-grained estimates of our own knowledge (whether we think we have a full picture of a situation, whether we are not so confident, whether we need to seek advice) is really the glue that enables effective collaboration in broader teams. Work by my former mentor Chris Frith has shown that when people are interacting with each other, they naturally share information about how uncertain or how confident they are in their views, and this is important for reaching a consensus opinion about the problem at hand. At the moment, at least, many of these AI systems do not have their metacognitive estimates on public display. As I mentioned earlier, these confidence estimates are hidden inside the system. They might be well calibrated, but they are not often explicitly communicated to the user. One challenge here is to reduce the gap between the internal workings of the system, which may have well-developed metacognition, and how that is communicated to the person interacting with it.

Risks of overestimating AI

One danger is that people over-attribute competence or confidence to the views of these systems because their internal probabilities are not on public display. We have shown in our lab in recent work that people have these illusions of confidence in AI systems. They are more willing to trust them for advice about a variety of topics than they are to trust a human who is giving identical advice.

© Shutterstock

Studying AI like brains

It is possible to study the brains of artificial systems in much the same way that we study human or animal brains, and, in fact, they are even more accessible, because we have access to every element of their workings. Labs in AI research are developing techniques where, just as we can image the brain at work, we can take the individual neurons or units that make up these very large neural networks and visualize their activity. We are able to probe the different layers of the neural networks that make up these systems with a very high degree of precision. One success story has been the demonstration that artificial neural networks trained to categorize visual images end up developing internal representations similar to those found in the primate visual system, as measured by recordings from neurons in a biological brain. There seems to be a very close mapping between the computations that these artificial systems discover for themselves to solve a particular problem, like classifying images, and the computations that the brain itself might be using.

Misconceptions about AI minds

There is a desire in cognitive science to think about AI systems in the same way that we think about the mind, and vice versa. There is a desire to characterize what an AI system like ChatGPT does in terms of whether it has language, whether it has memory, whether it has theory of mind and so on.

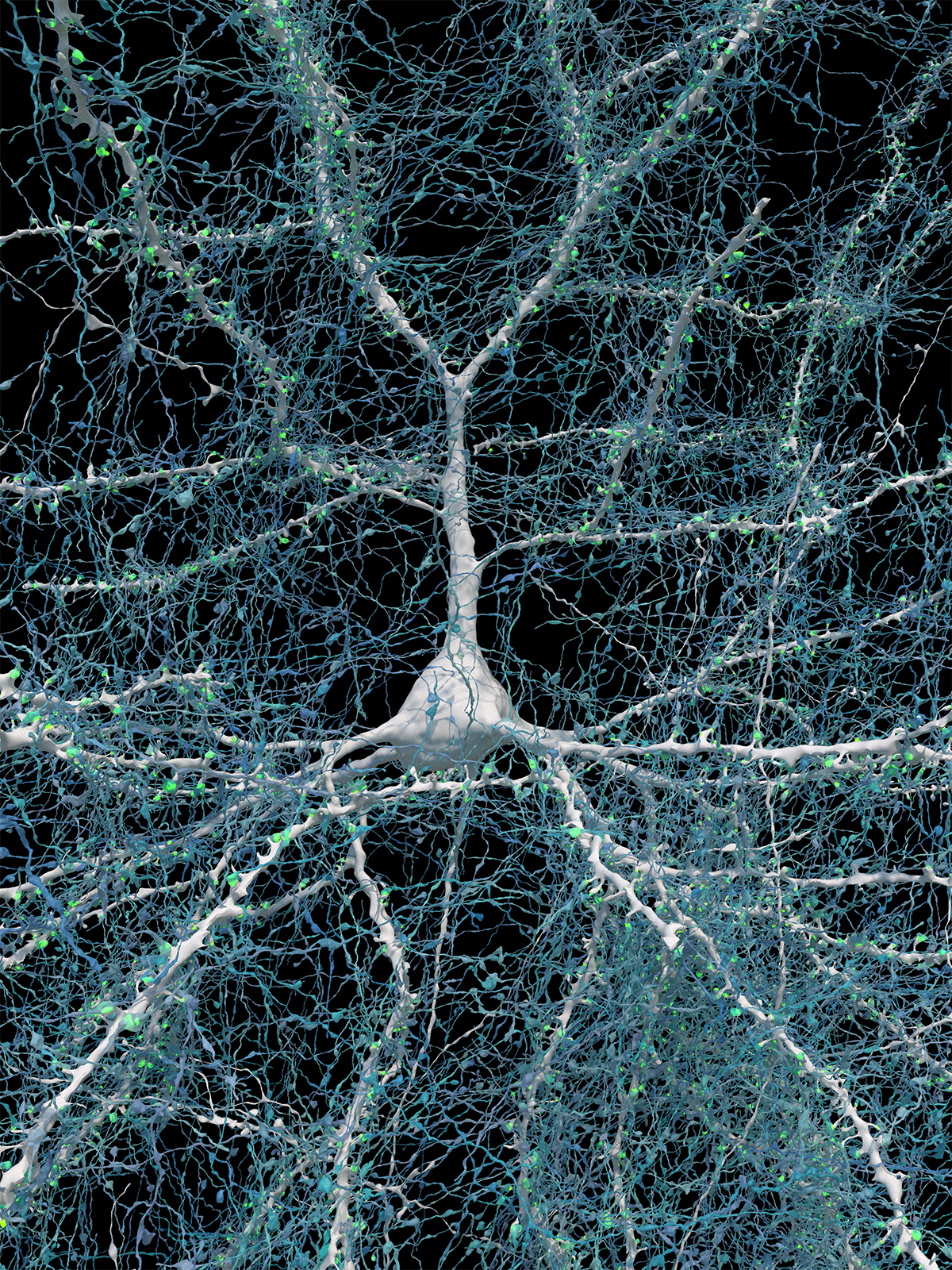

© Google Research & Lichtman Lab, Harvard University

We are rapidly approaching a limit to the extent to which we can translate our psychological concepts from the study of the human mind over to artificial systems, which are going to diverge in very interesting ways from the human or animal case. A nice analogy here is between studying the biology of flight in birds and the engineering of flight in an airliner. They both achieve flight, but they do so in very different ways, and it would be somewhat odd to ask whether the plane is flying in the same way as the bird. The aerodynamics might be somewhat similar, but there is going to be a limit to the level at which it makes sense to pursue a deep explanation of the systems involved in flying in the two cases. Something similar will limit us in forming deep analogies between the human mind and the minds of artificial systems. The minds of artificial systems will be real minds in the sense that we interact with them as if they have minds, but they will not be minds in the same sense as those we are used to interacting with in the human case.

Editor’s note: This article has been faithfully transcribed from the original interview filmed with the author, and carefully edited and proofread. Edit date: 2025

Discover more about metacognition

Fleming, S. M. (2021). Know thyself: The science of self-awareness. John Murray Press.

Fleming, S. M., & Lau, H. C. (2014). How to measure metacognition. Frontiers in Human Neuroscience, 8, Article 443.

Fleming, S. M., Dolan, R. J., & Frith, C. D. (2012). Metacognition: computation, biology and function. Philosophical Transactions of the Royal Society B: Biological Sciences, 367(1594), 1280–1286.

Fleming, S. M., & Dolan, R. J. (2012). The neural basis of metacognitive ability. Philosophical Transactions of the Royal Society B: Biological Sciences, 367(1594), 1338–1349.

Smith, J. D. (2010). Inaugurating the study of animal metacognition. International Journal of Comparative Psychology, 23(3), 401–413.

Dennett, D. (1993). Consciousness Explained. Penguin.