Inside the brain: the challenging use of technology in neuroscience

Neuroscientist

- Studying brain injury allows us to come to conclusions about the functioning of the brain.

- The big breakthrough in the last 50 years has been imaging inside the brain, with the arrival of MRI.

- The key is to find ways of getting signals from inside the head without invasively inserting anything into it, with the help of techniques like fMRI and EEG.

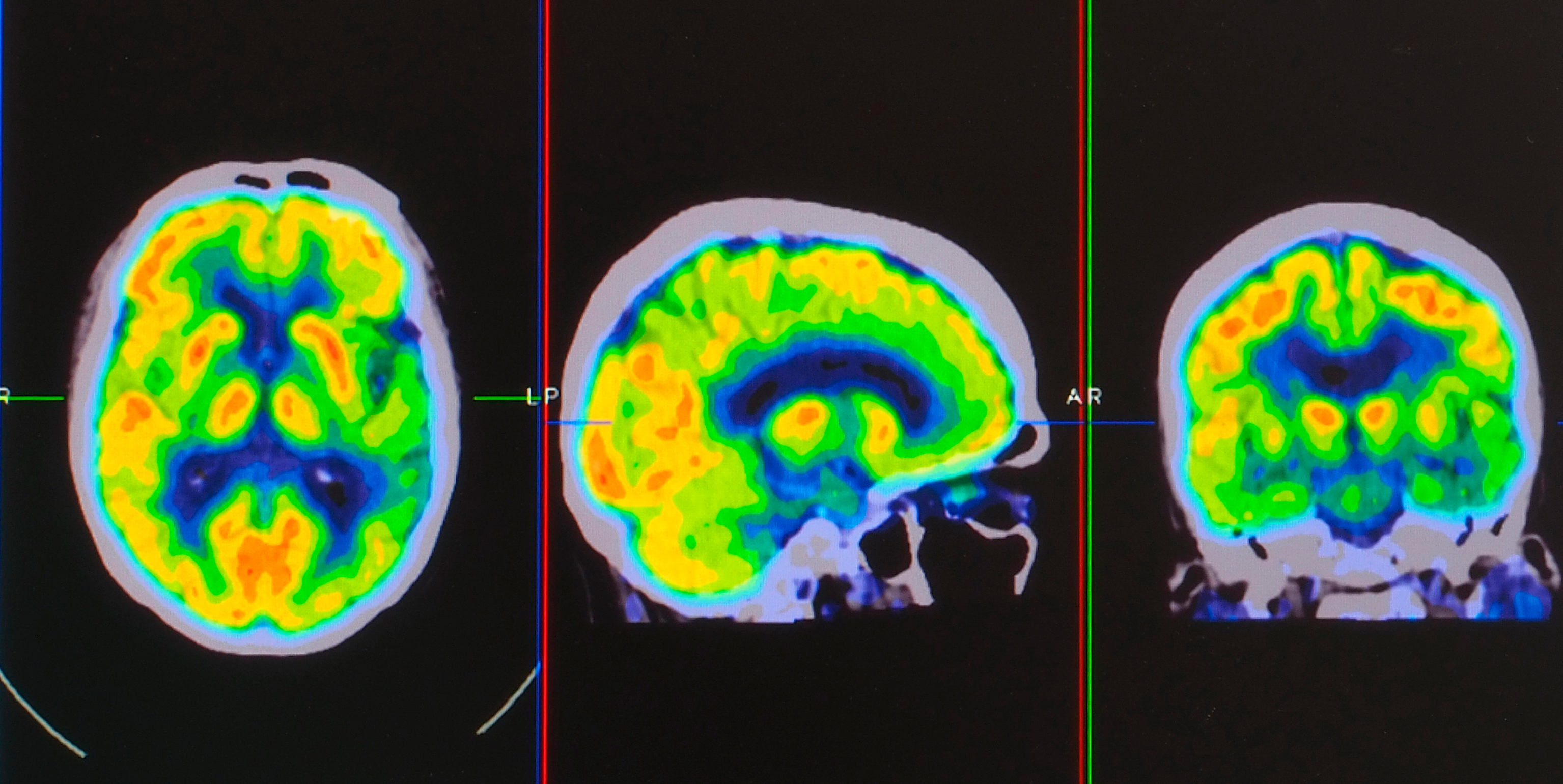

Seeing inside the brain

Photo by sfam_photo

The role of technology and techniques in neuroscience is absolutely essential, but it’s also challenging. When we interact with one another, we build images of what’s going on inside the heads of the people around us, and that’s a very powerful story we can tell. We also build models of what’s going on inside our own heads. Introspection is a fundamental part of being alive, but we can do better than that. In neuroscience especially, we have a myriad of techniques that let us look at the actual biological function that’s going on, and, interestingly, what we think is happening in our heads turns out to be a very unreliable guide to what’s actually going on.

Early theories...

Aristotle, who was right about many things, thought that the brain was a radiator, a device for exchanging heat like the one at the front of your car or on the wall of your house. He thought so because he observed the finely wrinkled structure of the brain and saw that it had many blood vessels. For a radiator, that’s exactly what you’re looking for: a large surface area and many pipes.

In medieval times, people observed the structure of the brain more closely through the anatomical study of the deceased. With a knife, one could open up the skull, slice up the brain and look at its structure even without a microscope. Curiously, what medieval researchers noticed was that there are areas inside the brain which are full of fluid. That fluid, which we now call cerebrospinal fluid, is part of a sealed system that runs from the brain down into the spinal cord. The prevailing theory was that thinking was done in these fluid-filled cavities, the ventricles, and that vibrations then travelled down the spinal cord and allowed us to move. None of that is true.

Studying damage

Historically, we could observe the structures of the brain very well post-mortem. But when the brain of a living person was damaged, they didn’t generally live long, so it was difficult to correlate any detail of the brain’s structure with how a living person behaved. In fact, it was high-velocity bullets and antibiotics that made the real difference in the 20th century. If a high-velocity bullet enters your brain and comes out the back, it doesn’t necessarily destroy everything around it, but it can take out a small part of your brain. If the person survives, with the help of antibiotics, we can make a coarse map of the brain from the injury, which allows us to draw conclusions about how the brain functions. If someone is missing part of the back left side of their brain, for example, and it turns out that they can’t see things to the right in front of them, we might conclude that the back of the brain on the left does the seeing on the right. It’s like the mirror image in a camera. Damage, therefore, has always been important in the study of the brain.

As we’ve become able to make images of the brain, in ways that I’ll describe, we can also learn from people who have had strokes. A stroke damages the brain from the inside rather than through an external blow, and imaging lets us see what damage has been caused and correlate it with what the person can do in their life. If you look at the brain of someone who has had a stroke after they’ve died, you can go down to a microscopic level and understand in great detail which parts of the brain were damaged.

New machinery

The big breakthrough in the last 50 years has been imaging inside the brain. X-rays are good for hard matter: if you break your arm, you’ll want an X-ray. But the key insight in terms of structure was a machine called MRI, which stands for magnetic resonance imaging. It grew out of a spectroscopic technique. Spectroscopy looks at the range of energies in a material, so if you get a substance from Mars and want to know what it is, you can put it in a spectroscope and it’ll tell you which chemicals are inside it. It turns out, though, that water produces a very strong signal in that magnetic resonance process, and magnetic resonance imaging uses this to show the density of water in a sample. So if you break your leg, you’ll have an X-ray, but if you mash up your knee, you’ll want an MRI, because it shows you where the soft, squishy stuff is. We can therefore get very detailed images of the structure of the brain, because the density of water tells you where the different tissues are. Not only does MRI let us see inside the brain and its structures, but when people have damage, we can see precisely where it is and correlate that with their function. These, however, are still, static images.

Can we learn what’s going on dynamically in the brain?

With the right ethical controls, you can insert an electrode into an animal’s brain and record from nerve cells. As a side note, when you record the firing of neurons, you use your ears, because neurons fire in patterns, and the best way for a scientist to detect those patterns is to listen in: your ear is exquisitely tuned to rhythms. So in the lab, you connect the electrode to an amplifier and a loudspeaker. Generally speaking, we don’t insert electrodes into human brains, even with ethical approval, although it can be done during surgery.
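To give a feel for what listening to neurons means, here is a minimal Python sketch that turns a list of spike times into a WAV file of clicks, one click per spike. It is an illustration under stated assumptions, not lab software: the spike times are made up, and the sample rate, click shape and file name `spikes.wav` are arbitrary choices. In a real set-up, the amplified electrode signal goes straight to the loudspeaker.

```python
import struct
import wave

SAMPLE_RATE = 8000  # samples per second (an arbitrary, assumed choice)


def click_train(spike_times_s, duration_s, sample_rate=SAMPLE_RATE, click_len=40):
    """Return 16-bit audio samples that are silent except for a short click at each spike."""
    n = int(duration_s * sample_rate)
    samples = [0] * n
    for t in spike_times_s:
        start = round(t * sample_rate)
        # A brief, decaying pulse stands in for the sound of one action potential.
        for i in range(click_len):
            if 0 <= start + i < n:
                samples[start + i] = int(20000 * (1 - i / click_len))
    return samples


def write_wav(path, samples, sample_rate=SAMPLE_RATE):
    """Write mono, 16-bit PCM audio to a WAV file."""
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)  # 2 bytes = 16 bits per sample
        w.setframerate(sample_rate)
        w.writeframes(struct.pack("<%dh" % len(samples), *samples))


# Hypothetical spike times (seconds): a regular burst, then an irregular spell.
spikes = [0.10, 0.15, 0.20, 0.25, 0.60, 0.63, 0.90]
samples = click_train(spikes, duration_s=1.0)
write_wav("spikes.wav", samples)
```

Played back, the evenly spaced clicks of the burst and the uneven clicks that follow are easy to tell apart by ear, which is the kind of rhythm the paragraph says the ear is tuned to.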

The key is to find ways of getting signals from inside the head without invasively inserting anything into it. There are basically two kinds of technique for doing that. In a sense, it’s almost like the Heisenberg uncertainty principle: in physics, you can measure precisely where a particle is or how fast it’s moving, but not both at the same time. This isn’t a quantum effect, but the trade-off is similar. One set of techniques tells us with exquisite precision when things are happening, and another tells us where they are happening.

Time maps of the brain

The first are mostly electrical recording techniques, in which we stick electrodes onto the scalp and record electrical activity. Because electrical conduction is very fast, we can tell almost immediately when a set of neurons fires; in other words, we can tell precisely when the brain notices something. We call this technique “EEG”, which stands for electroencephalography. It gives us very fine time maps, but we don’t know where in the head the activity we’re recording is coming from. It’s similar to listening in on a cocktail party from the door: you can hear voices, but you can’t tell whether it’s somebody talking quietly near the door or somebody talking loudly far away, and you’ve certainly got no information about whether they’re on the left-hand side of the room or the right-hand side. EEG is very helpful in terms of time, but not in terms of space.
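The cocktail-party problem can be made concrete with a toy forward model. The Python sketch below assumes, purely for illustration, that a sensor’s reading falls off with the square of the distance to a single point source; real EEG analysis also has to account for the orientation of the sources and for how the scalp, skull and brain conduct electricity. Even in this simplified world, a quiet nearby source and a loud distant one give identical readings, as do mirror-image sources on the left and right.

```python
def sensor_reading(strength, source_xy, sensor_xy=(0.0, 0.0)):
    """Toy forward model (an assumption): reading = strength / squared distance."""
    dx = source_xy[0] - sensor_xy[0]
    dy = source_xy[1] - sensor_xy[1]
    return strength / (dx * dx + dy * dy)


# A quiet voice near the door versus a loud voice further into the room.
quiet_near = sensor_reading(strength=1.0, source_xy=(0.0, 2.0))
loud_far = sensor_reading(strength=4.0, source_xy=(0.0, 4.0))

# Identical voices on the left-hand and right-hand sides of the room.
left = sensor_reading(strength=9.0, source_xy=(-3.0, 3.0))
right = sensor_reading(strength=9.0, source_xy=(3.0, 3.0))

print(quiet_near, loud_far)  # 0.25 0.25
print(left, right)           # 0.5 0.5
```

From the reading alone, there is no way to work back to a unique source, which is the spatial ambiguity EEG accepts in exchange for its precise timing.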

Spatial maps of the brain

To map functional activity in space, we often use a technique called fMRI, or functional magnetic resonance imaging. Most of the brain scans you see in the newspapers are fMRI scans, because neuroscience is extremely popular and we’re very seduced by beautiful images of what’s going on inside our heads. Using fMRI, we can identify an area, for example, that lights up more strongly when you fall in love, or locate an area involved in psychological stress. How does that work? Basically, the technique relies on oxygen. Our cells need oxygen to function and, interestingly, the brain has very low reserves of it, so it relies on the blood supply to replenish its oxygen constantly while it’s working. If you spy on the oxygen, you can tell when a part of the brain is more active.

For example, during lockdown, we find that city centres are much quieter and the districts around the edges of the city are busier. How could a Martian tell that? If you looked at deliveries of, let’s say, bread, milk or water to the supermarkets in the very centre of town compared with those on the periphery, you would know that there’s less human activity in the centre of town, because less is being delivered there. In the same way, by spying on the brain’s energy supplies, we can tell which parts of the brain are active. That’s how brain imaging works.

The supermarket metaphor

The metaphor also reveals two limitations. Firstly, you may have a supermarket on every street corner, but you don’t have a supermarket for every person in the city, so your view of the city is blurred: you can only see as much detail as there is in the supply network. In particular, if supply increases in a fine network of blood vessels, you can’t tell which neurons are driving that increase. It may be any of the million or so neurons supplied by a particular tiny blood vessel; you can tell that some of them are more active, but not which ones.
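The blurring can be sketched in a few lines. The Python below assumes, for illustration only, that each blood vessel supplies a fixed block of neurons and that the imaging signal for that block is simply their average activity; the block size and activity patterns are made up. Two quite different patterns of neural activity end up producing exactly the same signal.

```python
def voxel_signal(neuron_activity, neurons_per_vessel):
    """Average the activity of each block of neurons that shares one (assumed) blood supply."""
    return [
        sum(neuron_activity[i:i + neurons_per_vessel]) / neurons_per_vessel
        for i in range(0, len(neuron_activity), neurons_per_vessel)
    ]


# Two hypothetical patterns of 8 neurons (1 = active, 0 = quiet),
# with 4 neurons supplied by each vessel.
pattern_a = [1, 0, 0, 0, 0, 0, 0, 1]
pattern_b = [0, 0, 0, 1, 1, 0, 0, 0]

print(voxel_signal(pattern_a, 4))  # [0.25, 0.25]
print(voxel_signal(pattern_b, 4))  # [0.25, 0.25]
```

Different neurons are active in the two patterns, yet the averaged signal cannot tell them apart.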

The second problem, which is even more fundamental, is time. Just as with supermarkets, there is a lag between the activity of the brain and the resupply of oxygen to replenish the energy stores that were used. That lag is at least a tenth of a second but, for most of the techniques we’re using, it’s actually around a second or two. So there is a delay between the activity itself and the signal we’re looking at. When we make these seductive images of the brain, we’re unable to see simultaneously where something is happening and when it’s happening, so our models have to bridge these two levels of uncertainty as we test our hypotheses about what’s going on inside the head.
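The lag can be illustrated by treating the oxygen-supply signal as a smoothed, delayed copy of neural activity, obtained by convolving the activity with a response curve. In the Python sketch below, the gamma-shaped curve, its peak two seconds after the activity and the 0.1 s time step are illustrative assumptions chosen to match the “second or two” above; they are not a measured haemodynamic response.

```python
import math

DT = 0.1  # time step in seconds (assumed)


def response_kernel(tau=1.0, length_s=10.0, dt=DT):
    """Gamma-shaped stand-in for the blood-supply response; it peaks at t = 2 * tau."""
    n = int(round(length_s / dt))
    return [(k * dt / tau) ** 2 * math.exp(-k * dt / tau) for k in range(n)]


def convolve(signal, kernel):
    """Causal discrete convolution: out[t] = sum over k of kernel[k] * signal[t - k]."""
    out = [0.0] * len(signal)
    for t in range(len(signal)):
        for k in range(min(t + 1, len(kernel))):
            out[t] += kernel[k] * signal[t - k]
    return out


# A brief burst of neural activity at t = 1.0 s in a 10 s recording.
neural = [0.0] * 100
neural[10] = 1.0
supply = convolve(neural, response_kernel())

peak_step = max(range(len(supply)), key=supply.__getitem__)
print("activity at", 10 * DT, "s; supply signal peaks at", round(peak_step * DT, 1), "s")
```

In this toy model, a burst at 1 s shows up as a broad bump peaking at 3 s, so the supply signal alone cannot say precisely when the activity happened.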

A future of artificial intelligence?

With hundreds of labs around the world using an ever-increasing array of technology to see what’s going on inside the heads of humans and animals, we’re gathering vast amounts of data and, broadly speaking, there are two things we can do with it. We don’t have a grand theory of the brain that we could prove or disprove, but perhaps this data will allow us to develop theories and test simple hypotheses in the usual scientific manner. Alternatively, because we know so much about the detailed anatomy and functioning of the brain, we could build a simulation of the brain on a computer and test our hypotheses on that. Maybe such simulations would even allow us to build an artificial intelligence.

Sadly, this is not the case, at least not yet. There seem to be two different problems. One is that we lack a unified theory; the other is that we don’t know what difference makes a difference. We do know that there are scores of billions of neurons in the brain and that each of them makes thousands of connections. We also know that capturing even a single cell’s detailed anatomy and functioning would use up a huge amount of computing power. It may be that if we could simulate everything about a set of neurons in some unimaginably huge computer, we would know what was happening in the brain.
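A back-of-the-envelope calculation shows why even the connections alone are daunting. The figures below are assumptions chosen to match the text’s “scores of billions” of neurons and “thousands” of connections each, and storing a single 4-byte number per connection is a deliberately minimal, hypothetical requirement that ignores everything happening inside each cell.

```python
neurons = 80e9                  # assumed: "scores of billions" of neurons
connections_per_neuron = 5_000  # assumed: "thousands" of connections each
bytes_per_connection = 4        # assumed: one 32-bit number per connection

connections = neurons * connections_per_neuron
terabytes = connections * bytes_per_connection / 1e12

print(f"{connections:.1e} connections")        # 4.0e+14 connections
print(f"about {terabytes:,.0f} TB of memory")  # about 1,600 TB of memory
```

Even this minimal bookkeeping, before any detailed anatomy or dynamics, runs to more than a petabyte.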

However, it seems implausible that all of these differences are critical. It seems that we must abstract neural processes into slightly higher-level ways of understanding what’s going on in the head, perhaps using a simple model of a single neuron without all its exquisite anatomical detail, and then putting such neurons together into networks. To do that, we need to understand what’s important about the functioning of one neuron in the system and how we can extrapolate from that to the ensemble. So although we’re gathering enormous amounts of data, our lack of a unified theory and our lack of understanding of what difference makes a difference mean that neurally inspired simulations do not yet seem to lead directly to artificial intelligence.
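One widely used example of such a simplified neuron is the leaky integrate-and-fire model, chosen here for illustration; the text does not commit to any particular model. Each neuron is reduced to a single voltage that leaks back towards rest, integrates its input, and emits a spike and resets whenever it crosses a threshold. The Python sketch below uses arbitrary units and assumed parameter values.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0, v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron, stepped with forward Euler.

    dv/dt = (v_rest - v + I) / tau; a spike is recorded and v is reset
    whenever v reaches v_threshold. Units are arbitrary; dt and tau are
    in assumed milliseconds. Returns the list of spike times.
    """
    v = v_rest
    spike_times = []
    for step, current in enumerate(input_current):
        v += dt * (v_rest - v + current) / tau  # leak towards rest while integrating input
        if v >= v_threshold:
            spike_times.append(step * dt)
            v = v_reset
    return spike_times


# Constant inputs of different strengths for 200 time steps.
weak = simulate_lif([0.9] * 200)    # settles below threshold, so never fires
medium = simulate_lif([1.5] * 200)
strong = simulate_lif([3.0] * 200)
print(len(weak), len(medium), len(strong))  # 0 9 25
```

Stronger input produces more frequent spikes, and input too weak to push the voltage past threshold produces none. Networks can then be assembled by feeding one model neuron’s spikes into another’s input, which is the kind of abstraction the paragraph describes.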
